A Better ToolVox Shortcut

ToolVox AI v1.0.7 is out! Optimized and Improved. New Features to Interact with and Customize ChatGPT or OpenRouter LLM Models. Useful Lessons on Shortcut Optimization.

My shortcut to interact with ChatGPT (and other LLMs) has received a significant update, and I’m happy to share some of the changes with you.

I have integrated it with OpenRouter’s API, which gives you access to models that many users could not otherwise use (I’m talking about Claude V1, Claude V2, or GPT-4, for example). Since the shortcut is no longer limited to GPT models, I have also renamed it ToolVox AI (instead of ToolVox GPT).
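For readers curious what such an integration looks like outside of Shortcuts, here is a minimal Python sketch of a chat request to OpenRouter. It is not how ToolVox AI is built (the shortcut uses Shortcuts actions); the function names are made up for illustration, and the model identifier you pass in should be checked against OpenRouter's model list.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(api_key, model, messages):
    """Build (but do not send) a chat-completion request for OpenRouter."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        OPENROUTER_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # Assumes the OpenAI-style response shape: choices -> message -> content.
    return payload["choices"][0]["message"]["content"]
```

Calling `send(build_request(key, model_id, [{"role": "user", "content": "Hello"}]))` requires a real API key and network access; switching models only means changing the `model_id` string.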

I have optimized the logic behind the shortcut, making it faster than before. This is perhaps one of the most noticeable improvements.

I have discontinued the Data Jar version of the shortcut. Instead, there are now two options for saving and resuming conversations within the same shortcut: Bear notes & Bookmarks.

I have added an auto-save for the most recent conversation. This speeds up the saving of bookmarks and also serves as a backup in case of timeouts or errors. The most recent conversation can always be accessed from the main menu.

The user’s setup of ToolVox AI is now part of a separate shortcut which, when run, saves all the user’s data in a JSON file inside the Shortcuts folder. This allows future updates of ToolVox AI without replacing the user’s personalized setup and presets.

The previous helper shortcut is no longer necessary: ToolVox AI now runs as an independent shortcut and only reads the user’s settings from the created JSON file.

Simple non-AI presets for text transformations must now be executed with JavaScript. I am providing some samples in the ToolVox AI Setup shortcut.
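The split between a setup step that writes a JSON file and a main step that only reads it can be sketched in Python as follows. This is an illustration of the pattern, not the shortcut's real file layout: the file name and the keys inside the settings are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def save_settings(path, settings):
    """Setup step: write the user's settings to a JSON file."""
    Path(path).write_text(json.dumps(settings, indent=2), encoding="utf-8")


def load_settings(path):
    """Main step: read the settings, never write them."""
    file = Path(path)
    if not file.exists():
        raise FileNotFoundError(f"Run the setup step first: {file} is missing")
    return json.loads(file.read_text(encoding="utf-8"))


# Demo in a temporary folder so nothing real is touched.
with tempfile.TemporaryDirectory() as folder:
    settings_file = Path(folder) / "toolvox_settings.json"  # hypothetical name
    save_settings(
        settings_file,
        {"presets": {"Summarize": {"FirstPrompt": "Summarize: {txt}"}}},
    )
    loaded = load_settings(settings_file)
```

Because the main step never writes to the file, replacing it with a newer version leaves the saved settings untouched.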

Simple presets can no longer become AI conversations. Keeping this processing separate has further helped optimize the speed of the shortcut.

You can now use {txt} as a placeholder for the user’s input when setting a “FirstPrompt” value in your presets, or when adding a custom prompt to a shared text.

There’s a new optional “AutoPrompt” setting which repeats the “FirstPrompt” throughout the conversation. This is useful to keep the model on track, avoid hallucinations, or clarify requests.
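Here is a small Python sketch of how a {txt} placeholder and an AutoPrompt-style option could behave. The function names and the fallback when no placeholder is present are assumptions made for illustration; the shortcut itself may handle these cases differently.

```python
def fill_prompt(template, user_input):
    """Insert the user's input where the template has {txt}."""
    if "{txt}" in template:
        return template.replace("{txt}", user_input)
    # Assumption: with no placeholder, the input goes on a new line.
    return f"{template}\n{user_input}"


def build_user_message(first_prompt, user_input, turn, auto_prompt=False):
    """Apply the first prompt on turn 0, or on every turn if auto_prompt is on."""
    if turn == 0 or auto_prompt:
        return fill_prompt(first_prompt, user_input)
    return user_input
```

With `auto_prompt=False`, only the first message carries the instruction; with `auto_prompt=True`, every message is wrapped in it, which costs more tokens but keeps the instruction in front of the model.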

I’ve added support for several settings that allow more customization of, and experimentation with, the model you choose for your conversation. I’m talking about settings like frequency penalty, presence penalty, and the top_p value. Default fallback values are provided, but you can also change them in any of your presets.

You can now set a max_tokens value per model. This value determines the maximum number of tokens that the chosen model generates in a response.
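The idea of fallback defaults, preset overrides, and a per-model max_tokens can be sketched in Python like this. The default numbers, the model names, and the dictionary layout are assumptions for illustration, not the values ToolVox AI ships with.

```python
# Assumed fallback values; a preset may override any of them.
DEFAULTS = {"frequency_penalty": 0.0, "presence_penalty": 0.0, "top_p": 1.0}

# Hypothetical per-model response limits.
MAX_TOKENS_BY_MODEL = {"model-a": 1024}


def request_options(model, preset=None):
    """Merge defaults, preset overrides, and the model's max_tokens."""
    options = dict(DEFAULTS)
    for key, value in (preset or {}).items():
        if key in DEFAULTS:  # ignore preset keys that are not model settings
            options[key] = value
    if model in MAX_TOKENS_BY_MODEL:
        options["max_tokens"] = MAX_TOKENS_BY_MODEL[model]
    return options
```

The resulting dictionary can be merged into the request body alongside the model name and messages.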

You can now set a limit to the number of messages to be kept in the context of your conversations.
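A message limit of this kind amounts to trimming the conversation history before each request. The Python sketch below shows one way to do it; keeping system messages outside the limit is an assumption of this example, not a documented behavior of the shortcut.

```python
def trim_context(messages, limit):
    """Keep any system messages plus the `limit` most recent other messages."""
    system = [m for m in messages if m["role"] == "system"]
    recent = [m for m in messages if m["role"] != "system"]
    # A slice of [-0:] would return everything, so handle 0 explicitly.
    kept = recent[-limit:] if limit > 0 else []
    return system + kept
```

A lower limit means fewer tokens sent per request, at the price of the model forgetting earlier parts of the conversation.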

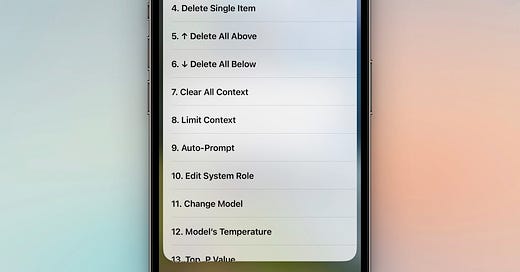

I’ve simplified and expanded the use of prompt commands. Among the new commands is a “console” menu which can be triggered at any time in the middle of a conversation. With this, you can now easily change models, remove items, or modify A LOT of settings without leaving the chat.

I have simplified my previously written guide. I must admit that in my excitement I may have gone overboard with the amount of content I shared the first time around. I’ve now tried to keep the guide to the basics, and added explanations to the setup shortcut itself.

Lessons on Shortcut Optimization

Here’s some advice for anyone who is into building shortcuts.

One of the things that bothered me the most with the first version of ToolVox AI had to do with its speed. Unfortunately, there’s no one-size-fits-all solution for optimizing shortcuts. However, after lots of research and testing, I discovered that there’s a “loading” speed and a “processing” speed, and each needs a different approach.

Loading speed has to do with the size of the shortcut. To improve this:

I tried to reduce the number of variables used.

I tried to combine actions and get rid of redundant ones.

I tried to keep the number of shortcut actions as low as I could.

ToolVox AI is still a BIG shortcut, even after I removed a lot of actions and variables. However, unlike before, I believe loading speed is now bearable. It is important to mention that loading speed differs from device to device. It loads much faster on my iPhone 14 Pro than on my MacBook Air M1.

Once ToolVox AI loads, it is much faster than before and the speed difference between devices is less noticeable, which takes me to the next point.

Processing speed has to do with the actions within the shortcut itself.

Speed was greatly improved by keeping all the processing within a single shortcut and not relying on actions that had to be loaded from other shortcuts.

Actions that involve other apps (like Data Jar) are slower than native shortcut actions. This was one of the reasons I opted to discontinue the Data Jar version.

Some shortcut actions in particular are very slow: match, split, combine, and repeat. Whenever possible, I now use regex replacements instead of matches, and add to variables instead of splitting them. For combining, it’s much better to place a variable inside a text box and assign that to a variable.

You must be aware that some actions perform faster or slower on different devices. This required a lot of testing, and lots of trial & error. I built an entire ToolVox AI version running on several JavaScript blocks that was super fast on my phone, only to find that it was terribly slow on my MacBook Air M1. I decided to scrap the JavaScript idea altogether.
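To make the "replace instead of split, repeat, and combine" idea concrete, here is a Python stand-in. It only shows that the two approaches give the same result; it says nothing about timings in Shortcuts, and the bullet-prefix task is an example chosen for illustration.

```python
import re

text = "alpha\nbeta\ngamma"

# Shortcuts-style pattern: split, repeat over each item, then combine.
parts = []
for line in text.split("\n"):
    parts.append("- " + line)
looped = "\n".join(parts)

# Single-step alternative: one regex replacement over the whole text.
# With MULTILINE, ^ matches at the start of every line.
replaced = re.sub(r"^", "- ", text, flags=re.MULTILINE)
```

Both variables end up holding the same bulleted text, but the second version is one action instead of a loop with several actions per item.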

I will continue to optimize and update ToolVox AI as long as I keep having ideas to improve it. Feel free to check it out if you haven’t already. All feedback is welcome :)
